Gain control over your growing GenAI infrastructure.

Scale GenAI securely and reliably. Eliminate downtime and minimize costs.

LLMGW is an enterprise-grade AI gateway that provides centralized management, security, and optimization for Large Language Model operations. Built for organizations requiring robust governance, compliance, and cost control over their AI infrastructure, LLMGW seamlessly integrates with leading AI providers including OpenAI, Azure OpenAI, and Anthropic.

LLMGW enables organizations to harness AI capabilities while maintaining the security, compliance, and operational standards required for enterprise environments.

Unified AI Operations Management

Provider-agnostic API: Single interface across all supported AI providers

Intelligent routing: Automatic workload distribution based on performance, cost, and availability

High availability: Built-in failover and redundancy mechanisms

Multi-region support: Global deployment with configurable data residency
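
To make the routing and failover ideas above more concrete, here is a minimal sketch of cost- and latency-weighted provider selection with sequential failover. It does not show LLMGW's actual API: the `Provider` class, the `rank_providers` and `route` functions, the weights, and the sample numbers are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    """Hypothetical provider record; the fields are illustrative assumptions."""
    name: str
    cost_per_1k_tokens: float  # USD
    latency_ms: float          # recent average latency
    healthy: bool = True       # result of the latest health check


def rank_providers(providers, cost_weight=0.5, latency_weight=0.5):
    """Return healthy providers ordered from most to least preferred."""
    healthy = [p for p in providers if p.healthy]
    if not healthy:
        return []
    # Normalize each metric by its maximum so the weights are comparable.
    max_cost = max(p.cost_per_1k_tokens for p in healthy) or 1.0
    max_latency = max(p.latency_ms for p in healthy) or 1.0

    def score(p):
        return (cost_weight * p.cost_per_1k_tokens / max_cost
                + latency_weight * p.latency_ms / max_latency)

    return sorted(healthy, key=score)  # lower score is better


def route(providers, send):
    """Try providers in ranked order and fail over to the next on error."""
    errors = []
    for provider in rank_providers(providers):
        try:
            return provider.name, send(provider)
        except Exception as exc:
            errors.append((provider.name, repr(exc)))
    raise RuntimeError(f"all providers failed: {errors}")
```

In this sketch, `send` is any callable that forwards the request to the given provider. Unhealthy providers are skipped before ranking, and a failed call moves on to the next-ranked provider.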

Enterprise Security & Compliance

Data protection: End-to-end encryption with configurable residency controls

Audit & governance: Comprehensive logging with immutable audit trails

Access control: Fine-grained RBAC with Active Directory integration
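
As a rough illustration of the access-control item above, the sketch below maps directory groups to roles and roles to permissions. The group names, role names, and permission strings are invented for this example and are not taken from LLMGW. A real deployment would read group membership from Active Directory rather than from a hard-coded table.

```python
# Hypothetical role -> permission table (illustrative names only).
ROLE_PERMISSIONS = {
    "admin": {"models:invoke", "budgets:edit", "audit:read"},
    "developer": {"models:invoke"},
    "auditor": {"audit:read"},
}

# Hypothetical directory group -> role mapping.
GROUP_TO_ROLE = {
    "GenAI-Admins": "admin",
    "GenAI-Developers": "developer",
    "GenAI-Auditors": "auditor",
}


def permissions_for(groups):
    """Collect the union of permissions granted by a user's groups."""
    perms = set()
    for group in groups:
        role = GROUP_TO_ROLE.get(group)
        if role is not None:
            perms |= ROLE_PERMISSIONS[role]
    return perms


def is_allowed(groups, permission):
    """Return True if any of the user's groups grants the permission."""
    return permission in permissions_for(groups)
```

Unknown groups grant nothing, so access is denied by default in this sketch.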

Cost Optimization & Control

Usage analytics: Granular tracking by user, project, and department

Budget management: Automated controls with customizable spending limits

Financial transparency: Real-time cost monitoring with detailed reporting

Chargeback capabilities: Department-level allocation and cost attribution
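
The budget and chargeback items above could work roughly as in the following sketch: spend is recorded per department and per user, and a request is refused once it would exceed the department's limit. The `BudgetTracker` class and its method names are hypothetical. Denying departments that have no configured limit is an assumption of this example, not documented LLMGW behavior.

```python
from collections import defaultdict


class BudgetTracker:
    """Hypothetical per-department spending limits with per-user attribution."""

    def __init__(self, limits):
        self.limits = dict(limits)            # department -> limit in USD
        self.by_department = defaultdict(float)
        self.by_user = defaultdict(float)     # (department, user) -> USD

    def allow(self, department, estimated_cost):
        """Return True if the request fits within the remaining budget."""
        limit = self.limits.get(department)
        if limit is None:
            return False  # assumption: no configured limit means no access
        spent = self.by_department.get(department, 0.0)
        return spent + estimated_cost <= limit

    def record(self, department, user, cost):
        """Attribute actual cost to both the department and the user."""
        self.by_department[department] += cost
        self.by_user[(department, user)] += cost

    def chargeback_report(self):
        """Return total spend per department for cost allocation."""
        return dict(self.by_department)
```

A gateway would typically call `allow` before forwarding a request and `record` once the provider reports actual token usage.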

Operations & Monitoring

Performance insights: Real-time dashboards and SLA tracking

Quality assurance: Output monitoring with customizable validation rules

Azure integration: Native support for Azure Monitor and Application Insights

AWS integration: Native support for Amazon CloudWatch

Reliability patterns: Circuit breakers, automatic retries, and health checks
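
For readers unfamiliar with the circuit breaker pattern mentioned above, here is a minimal generic sketch. It is not LLMGW's implementation: the class name, thresholds, and state names are assumptions chosen for illustration.

```python
import time


class CircuitBreaker:
    """Generic circuit breaker: closed -> open -> half-open -> closed."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # seconds to wait before a trial call
        self.clock = clock                  # injectable for testing
        self.failures = 0
        self.opened_at = None

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn, *args, **kwargs):
        """Run fn unless the circuit is open; track failures and successes."""
        if self.state == "open":
            raise RuntimeError("circuit open: call rejected")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open on reaching the threshold, or re-open after a failed trial.
            if (self.failures >= self.failure_threshold
                    or self.opened_at is not None):
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

After `failure_threshold` consecutive failures the breaker rejects calls immediately, which protects a struggling provider from further load. Once `reset_timeout` has elapsed, a single trial call decides whether the circuit closes again.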

Platform Management

Configuration as code: Version-controlled deployment configurations

Zero-downtime deployments: Blue-green deployment strategies

Administrative portal: Centralized management interface

Lifecycle management: Safe rollbacks and environment promotion

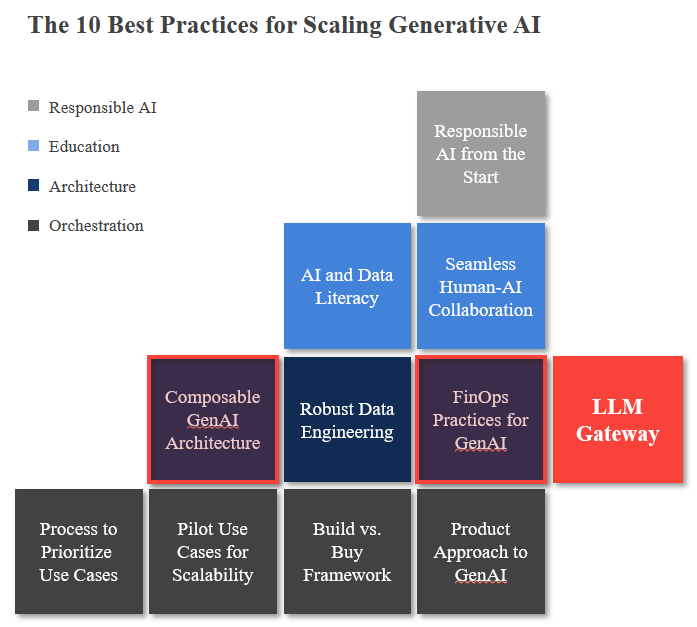

Best Practice

LLMGW addresses key architectural best practices for scaling generative AI across the enterprise.